Checklist

A checklist for reporting crowdsourcing experiments.

Project repository on GitHub: TrentoCrowdAI/crowdsourcing-checklist

Checklist for reporting crowdsourcing experiments

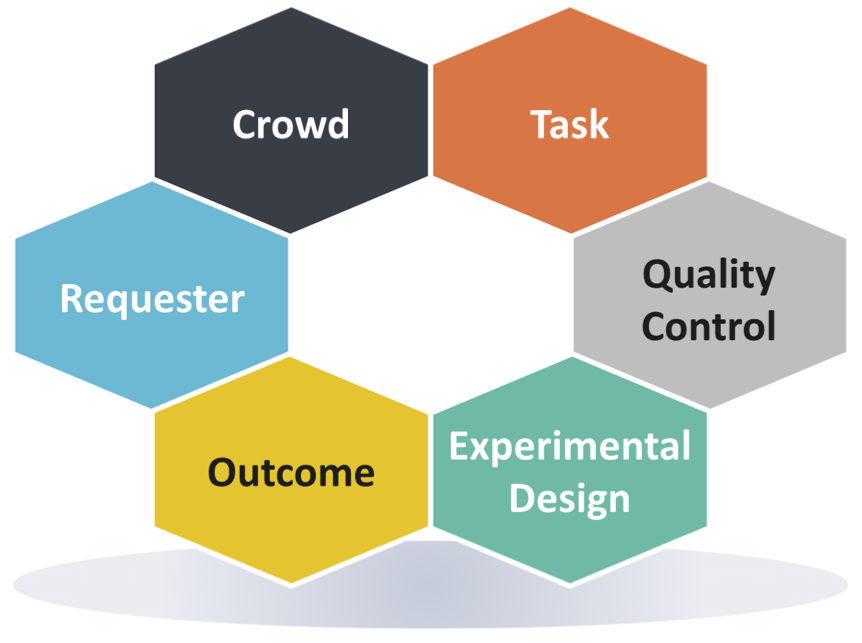

On this website, we introduce a checklist to aid authors in being thorough and systematic when describing the design and operationalization of their crowdsourcing experiments. The checklist also aims to help readers navigate and understand the details underlying crowdsourcing studies. By providing a checklist and depicting where the research community stands in terms of reporting practices, we expect our work to stimulate additional efforts to move the transparency agenda forward, facilitating a better assessment of the validity and reproducibility of experiments in crowdsourcing research.

Paper

Please refer to the following paper for more details on our work.

On the state of reporting in crowdsourcing experiments and a checklist to aid current practices

Jorge Ramírez+, Burcu Sayin+, Marcos Baez^, Fabio Casati+, Luca Cernuzzi◦, Boualem Benatallah△, Gianluca Demartini‡

+ University of Trento, Italy. ^ Université Claude Bernard Lyon 1, France. ◦ Catholic University, Paraguay. △ University of New South Wales, Australia. ‡ University of Queensland, Australia.

Abstract

Crowdsourcing is being increasingly adopted as a platform to run studies with human subjects. Running a crowdsourcing experiment involves several choices and strategies to successfully port an experimental design into an otherwise uncontrolled research environment, e.g., sampling crowd workers, mapping experimental conditions to micro-tasks, or ensuring quality contributions. While several guidelines inform researchers in these choices, guidance on how and what to report from crowdsourcing experiments has been largely overlooked. If under-reported, implementation choices constitute sources of variability that can affect the experiment's reproducibility and prevent a fair assessment of research outcomes. In this paper, we examine the current state of reporting of crowdsourcing experiments and offer guidance to address associated reporting issues. We start by identifying sensible implementation choices, relying on existing literature and interviews with experts, and then extensively analyze the reporting of 171 crowdsourcing experiments. Informed by this process, we propose a checklist for reporting crowdsourcing experiments.

Feedback & Support

Feel free to reach out via GitHub issues to seek support or provide feedback on using and improving the checklist.

If you use the checklist in your work, please cite:

@inproceedings{RamirezCSCW2021,
  author    = {Jorge Ram{\'{\i}}rez and
               Burcu Sayin and
               Marcos Baez and
               Fabio Casati and
               Luca Cernuzzi and
               Boualem Benatallah and
               Gianluca Demartini},
  title     = {On the state of reporting in crowdsourcing experiments and a checklist to aid current practices},
  booktitle = {Proceedings of the ACM on Human-Computer Interaction (PACM HCI), presented at the 24th ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW 2021). October 2021},
  year      = {2021}
}